Some of the articles focused just on theory, while others offer practical code-based solutions in a variety of programming languages. There are many useful blog posts on the topic, which helped me slowly understand how the algorithm worked, so I could then find a practical way to implement it in DAX. Variations of the HLL algorithm are used by companies such as Google, Facebook and Twitter to help them calculate, analyse and understand their massive datasets more efficiently. The idea behind HLL is to quickly produce a cardinality estimate over a big dataset without consuming a large amount of memory.

My initial research for a pattern quickly let me to the HyperLogLog (HLL) algorithm, which looked to be what I was after.

#Ssas tabular distinct count crack#

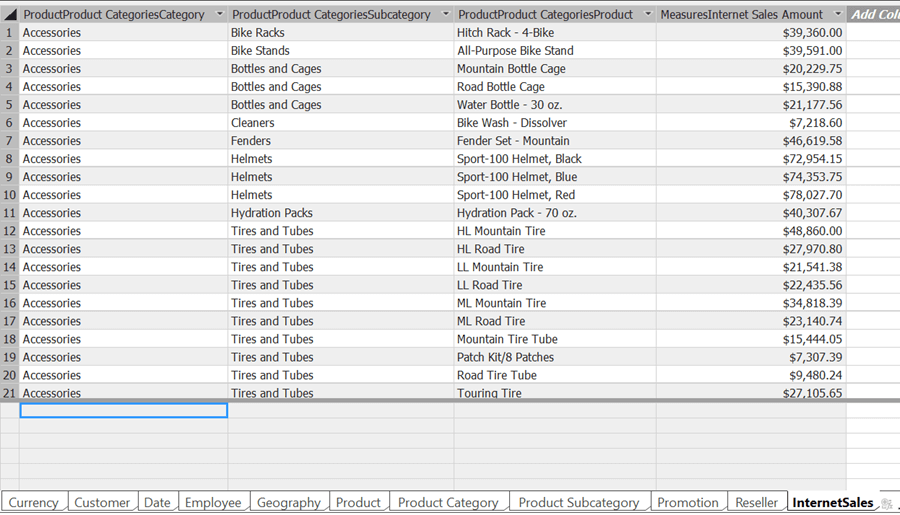

Still, I wanted to see if I could replicate the function inside a data model – so had a crack at building a DIY version of APPROX DISTINCT COUNT function in DAX. This DAX function works if you are already using Direct Query with one of these data sources. The function cannot be tuned and simply makes use of the APPROX_COUNT_DISTINCT function in TSQL. The catch with the APPROXIMATEDDISTINCTCOUNT function is it only works in Direct Query mode over an Azure SQL DB, or Azure SQL DW. In December 2018, we introduced a new DAX function called APPROXIMATEDDISTICNTCOUNT which, as the name suggests, doesn’t return a precise result instead, it takes some mathematical shortcuts to return a result faster using less CPU and memory. There are plenty of other reporting scenarios where you might prefer a faster query time for your distinct count calculation, and still be happy so long as a result was close enough (say +/- 2%). In this scenario, the 248,000 number is good enough. The fast result time is far more useful to me than taking 10 minutes or more to say to me it found exactly 248,123 results. If I search for “DAX Distinct Count” in Google, I quickly get back a result telling me it found approximately 248,000 results (in 0.36 seconds). These all perform better (or worse) depending on the underlying nature of the data – but can still quite resource-intensive on CPU & memory. We can also write a precise, distinct count calculation in DAX using other tricks which involve combining various functions such as SUMX, SUMMARIZE, VALUES or DISTINCT etc. However, there are also scenarios where it might be more relevant to prioritise speed over precision – especially when working with large values. There are many scenarios where this is important, such as finance, auditing, healthcare etc. In DAX we have the DISTINCTCOUNT function, which provides a result which is 100% accurate. This compounds as the number of distinct values grow. The process requires more memory along with additional data processing at runtime.

Whereas for a distinct count over a Customer ID column, an internal process needs to keep track of customers already counted to avoid double-counting. The COUNT function is similar to the SUM in that it only requires a small amount of memory and can also be calculated by a single scan operation. If a sales table shows ten transactions per day for a week, a SUM over the QTY column involves a single scan, and only a small amount of memory is required to store and accumulate a value during a single scan. The reason is the extra work required by the engine to produce an accurate distinct value compared with other aggregations such as SUM, MAX and COUNT etc.

The challengeĪs you may know, measures involving a Distinct Count calculation are often the slowest and most resource-hungry measures in your models. To apply this technique to your model, you need to add two additional integer columns (explained in more detail below) along with a calculated measure for every core column you’d like to speed up. DAX based Approx Distinct Count (HLL) versus DISTINCTCOUNT (Baseline)

0 kommentar(er)

0 kommentar(er)